There's a type of pain a brain will suffer only from mathematics, and I had a splitting headache of that kind in high school once as I struggled with some concept I couldn't quite master. I was good with numbers at that time, in the most advanced classes and really enjoying it -- but when I approached the teacher for help I was summarily turned away, I don't know why. Maybe the guy was having a bad day. But I needed help and wasn't getting it, so I finished that class as best I could and didn't take another, for a long long time. And that was too bad -- because although math does hurt, it's really really cool.

Recently I took a course called Mental Math out of Harvey Mudd, with Professor Arthur Benjamin through The Great Courses, an online business that is remarkably solid. It was the tenth course I've taken through TGC and one of the best. As it turns out, these skills are so useful I wish I'd had them long ago. Benjamin starts most of his 12 lectures by demonstrating, to a live audience, some pretty impressive feats. And then he breaks them down into digestible parts.

Some of the lessons were more or less common sense. Like breaking down a problem such as 19*32 into (20*32) - 32. That's 608. Or that multiplying a single digit by 9 gives a product whose first digit is one less than that digit, followed by the first digit's complement to 9: 9*8=72, 9*3=27, and so on.

Some lessons were approximate. If you want to check the order of magnitude of a multiplication answer, add up the number of digits in the two numbers you're multiplying. Your product will have that many digits or one fewer. Which? Multiply the two leading digits. If that product is 10 or more, the answer has the full count. If it's four or less, it's definitely the count minus one. Anything in between could go either way. Example: 6475*480. Since 6*4 >= 10, the product has the full 7 digits. If we multiply 1234 * 298, since 1*2 <= 4, the product will have 6 digits. Check it, it's true.
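
For the programmers, here is a minimal Python sketch of that digit-count rule. The function name `estimate_digits` and the tuple return value are illustrative choices, not something from the course.

```python
def estimate_digits(a: int, b: int) -> tuple[int, ...]:
    """Return the possible digit counts of a*b using only the leading digits."""
    total = len(str(a)) + len(str(b))          # digits in a plus digits in b
    lead = int(str(a)[0]) * int(str(b)[0])     # product of the two leading digits
    if lead >= 10:
        return (total,)                        # full count, guaranteed
    if lead <= 4:
        return (total - 1,)                    # one fewer, guaranteed
    return (total - 1, total)                  # 5 through 9: could be either


print(estimate_digits(6475, 480), len(str(6475 * 480)))
print(estimate_digits(1234, 298), len(str(1234 * 298)))
```

It assumes both inputs are positive whole numbers.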

Multiplying by 11 is freaky easy. 11*54=594 ... that 5 and 4 look familiar? The 9 is the sum of them. 11*32=352. 11*18=198. That is, 11*AB = A, A+B, B, and it works for large numbers too! 11*ABCDE is A, A+B, B+C, C+D, D+E, and E -- 11*2345 = 25,795. It only gets a little tricky when two neighboring digits add to more than 9, like 11*789, in which case it's 7, 7+8, 8+9, 9, or 7 15 17 9. Carry the 1's right to left and you easily see 8,679. That's pretty much it for 11zies.
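
Here is a small Python sketch of the same neighbor-sum idea, carries included. The name `times_11` is made up for illustration, and it assumes a non-negative whole number.

```python
def times_11(n: int) -> int:
    """Multiply n by 11 by adding each digit to its left-hand neighbor."""
    digits = [int(d) for d in str(n)]
    padded = [0] + digits + [0]
    # Column sums, left to right. For 789 this gives [7, 15, 17, 9].
    columns = [padded[i] + padded[i + 1] for i in range(len(padded) - 1)]

    result, carry = [], 0
    for col in reversed(columns):      # resolve carries right to left
        col += carry
        result.append(col % 10)
        carry = col // 10
    if carry:
        result.append(carry)
    return int("".join(str(d) for d in reversed(result)))


print(times_11(54), times_11(2345), times_11(789))
```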

It turns out the way I learned multiplication is the most drawn-out way to do it. Here's another way, the Criss Cross method. Take a small one first: 21*47. Right to left, it's 1*7 = SEVEN. (1*4)+(2*7)=18, that's EIGHT, carry a 1. Then 2*4 [plus the carried 1] is NINE, so the answer is 987. Here's about as bad as it gets, with more carrying: 37*62. 7*2=14, FOUR, carry the 1. [(7*6=42)+(3*2=6)]=48, plus the carried 1 = 49, that's NINE, carry the 4. Finally, 3*6=18 plus the carried 4 = 22 ... so the answer is 2294. In other words, reading left to right, AB*CD is A*C, then [(B*C)+(A*D)], then B*D, with a little bit of carrying. For bigger numbers this works, too, but you'll probably need paper ... I'll show you ABCD*EFGH. Start with the ones: D*H, then the next product digit moving left comes from (C*H)+(D*G). Then BH+CG+DF. Then AH+BG+CF+DE, then AG+BF+CE, then AF+BE, then AE. Yes it's a chore, but all you write down is the answer.
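
The column-by-column pattern can be sketched in Python like this. It's a rough illustration under my own naming (`criss_cross` is not a term from the course), and it assumes non-negative whole numbers.

```python
def criss_cross(a: int, b: int) -> int:
    """Multiply a*b one answer digit at a time, right to left."""
    x = [int(d) for d in reversed(str(a))]   # ones digit first
    y = [int(d) for d in reversed(str(b))]
    out, carry = [], 0
    for k in range(len(x) + len(y) - 1):
        # Column k collects every digit pair whose place values add up to k.
        col = carry + sum(
            x[i] * y[k - i] for i in range(len(x)) if 0 <= k - i < len(y)
        )
        out.append(col % 10)                 # the digit you write down
        carry = col // 10                    # the part you carry left
    # Whatever carry is left over becomes the leading digit(s).
    return int(str(carry) + "".join(str(d) for d in reversed(out)))


print(criss_cross(21, 47), criss_cross(37, 62))
```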

Here's another way when the two-digit numbers are anywhere near close together. Let's start easy: 43*42. Look for a nice tens number nearby (40). 43 is +3 from 40 and 42 is +2 away. Take 43 and add the +2 (from the 42-40), OR take the 42 and add the +3. Either way you get 45. Now 45*40 is easy to figure in your head ... as easy as 45*4, it's 1800. Then just add the product of the two adjusters: 2*3. So the answer is 1806. The same thing works when one or both numbers are below the target tenzie. Try 28*34. It's just 30*32=960 ... plus (-2*4) ... 952. See, I added 4 to 28 or subtracted 2 from 34 to get the 32 to multiply by 30. If both numbers are below the target, you add their product of course, because a negative times a negative is a positive. Example: 38*36 = (34*40)+(-2*-4) = 1360+8 = 1368.
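
In code the whole trick is one line. This is a minimal Python sketch; the name `near_base` and the explicit `base` argument are my own choices for illustration.

```python
def near_base(a: int, b: int, base: int) -> int:
    """Multiply a*b by measuring both numbers against a convenient base."""
    da, db = a - base, b - base        # the "adjusters"; may be negative
    # (base + da) * (base + db) = base * (a + db) + da * db
    return (a + db) * base + da * db


print(near_base(43, 42, 40))   # both above 40
print(near_base(28, 34, 30))   # one below, one above 30
print(near_base(38, 36, 40))   # both below 40
```

The identity holds for any base, so picking one that makes the first multiplication easy is the only real skill involved.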

If you're multiplying two two-digit numbers that start with the same digit, and their last digits add to 10, there's a super easy way. For 67*63, take the 6 and multiply by (6+1), then tack on the product of 7 and 3. That's (6*7),(7*3) ... 4221.
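
A quick Python sketch of that shortcut, with a guard for inputs it doesn't apply to. The function name is hypothetical.

```python
def same_tens_units_sum_10(a: int, b: int) -> int:
    """Multiply two 2-digit numbers sharing a tens digit whose units add to 10."""
    t1, u1 = divmod(a, 10)
    t2, u2 = divmod(b, 10)
    if t1 != t2 or u1 + u2 != 10:
        raise ValueError("needs equal tens digits and units that add to 10")
    # t*(t+1) supplies the hundreds; the units product fills the last two places.
    return t1 * (t1 + 1) * 100 + u1 * u2


print(same_tens_units_sum_10(67, 63))
```

Multiplying by 100 instead of gluing strings together keeps cases like 61*69 honest, where the units product is a single digit (09).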

To multiply any two numbers between 10 and 20, you can do it this way. Say 17*14. Take 17+4=21, times 10 (210) then add 7*4 ... it's 238. It works either way of course -- you could go 14*17, 14+7=21, times 10 and add the same 4*7.
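
Sketched in Python, assuming both inputs really are between 10 and 20 (the name `teens_product` is just for illustration):

```python
def teens_product(a: int, b: int) -> int:
    """Multiply two numbers in the range 10..20 using the add-then-times-10 trick."""
    if not (10 <= a <= 20 and 10 <= b <= 20):
        raise ValueError("both numbers must be between 10 and 20")
    # Add b's distance above 10 to a, times 10, then add the product of both distances.
    return (a + (b - 10)) * 10 + (a - 10) * (b - 10)


print(teens_product(17, 14), teens_product(14, 17))
```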

When it comes to division, I learned the old long division, which is just a waste of time when the divisor is small, because in that case short division on paper does the same job so much quicker. Why write all those numbers? If you want to get the order of magnitude of your quotient right, the number of digits in the answer (before the decimal point) is the difference in length between the number and the divisor, or sometimes that plus 1. To decide whether to add the 1, just compare the two leading digits. If the leading digit of the number you're dividing is smaller, it'll be just the difference in digits. E.g., 6543/739: because the 6 is smaller than the 7, it will yield a 1-digit number (8.85...); but the result of 5439/284 will be two digits (19.15...), because 5 is larger than 2.
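
Here is the quotient-length rule as a small Python sketch. The name `quotient_digits` is made up, and returning `None` when the leading digits tie is my own choice, since the rule above doesn't settle that case.

```python
from typing import Optional


def quotient_digits(n: int, d: int) -> Optional[int]:
    """Estimate how many digits the whole-number part of n/d has."""
    diff = len(str(n)) - len(str(d))
    lead_n, lead_d = int(str(n)[0]), int(str(d)[0])
    if lead_n < lead_d:
        return diff            # just the difference in length
    if lead_n > lead_d:
        return diff + 1        # the difference plus one
    return None                # leading digits tie: look at more digits


print(quotient_digits(6543, 739), 6543 // 739)
print(quotient_digits(5439, 284), 5439 // 284)
```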

If you want to know if something is divisible by 2, just look at the ones-place digit; everyone knows that. 6748 is, 7643 isn't. But it'll be divisible by 3 if the sum of all the digits is a multiple of 3. Try 3471: that adds to 15, so 3471 divides neatly by 3. A number is divisible by 4 if the last two digits are divisible by 4. By 5 if the last digit is 5 or 0, of course. By 6 if it's divisible by both 2 and 3 (see above). 7 is the most complicated: take off the last digit, double it, and subtract it from the rest. E.g., 112? 2 doubled is 4, and 11-4=7. Since that's a multiple of 7, 112 is too. It's divisible by 8 if the last three digits are divisible by 8. Nine is like three: sum the digits, and if that is a multiple of 9, you're good.

Another way to do 7 is this. By example, take 1234. Add or subtract a multiple of 7 to get a 0 in the ones place: 1234-14=1220, then kill the 0: 122. Do it again: 122+28=150, and kill the 0. 15 is not divisible by 7, so neither is 1234. This trick works for any divisor ending in 1, 3, 7, or 9. Is 1472 divisible by 23? Well, 1472-92=1380 ... 138+92=230. Kill the 0 and you get 23. So yes it is.
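
A rough Python sketch of the "make a zero, kill the zero" test follows. One simplification to flag: this version always subtracts a multiple of the divisor (taking the absolute value if it overshoots), whereas in your head you'd add or subtract, whichever is handier. The name `divisible_by` is illustrative.

```python
def divisible_by(n: int, d: int) -> bool:
    """Test whether d divides n, for a divisor d ending in 1, 3, 7, or 9."""
    if d % 10 not in (1, 3, 7, 9):
        raise ValueError("divisor must end in 1, 3, 7, or 9")
    while n >= d:
        # Exactly one multiple k*d with k in 0..9 leaves a 0 in the ones place.
        k = next(k for k in range(10) if (n - k * d) % 10 == 0)
        n = abs(n - k * d) // 10     # subtract it, then kill the 0
    return n == 0


print(divisible_by(1234, 7), divisible_by(112, 7), divisible_by(1472, 23))
```

Dropping the zero is safe because a divisor ending in 1, 3, 7, or 9 shares no factor with 10.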

Besides being fun, there's some immediately practical information in this course. To calculate change, just take each digit's complement to 9, except for the last digit, which you complement to 10. So pay $10 for something that costs $1.32? $8.68 change. Costs $6.98? It's $3.02. Just add another $10 if you paid with a $20 bill. I now can beat just about any checkout clerk.
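
As a sketch, here is the complement rule in Python for change from a $10 bill. It assumes the price is written like "1.32" (one dollar digit, two cents digits) and that the last digit isn't 0; the function name is hypothetical.

```python
def change_from_ten(price: str) -> str:
    """Change from $10 via complements: to 9 for each digit, to 10 for the last."""
    digits = price.replace(".", "")              # "1.32" -> "132"
    if len(digits) != 3 or not digits.isdigit() or digits[-1] == "0":
        raise ValueError("expects a price like '1.32' with a nonzero last digit")
    comp = [9 - int(d) for d in digits[:-1]] + [10 - int(digits[-1])]
    return f"{comp[0]}.{comp[1]}{comp[2]}"


print(change_from_ten("1.32"), change_from_ten("6.98"))
```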

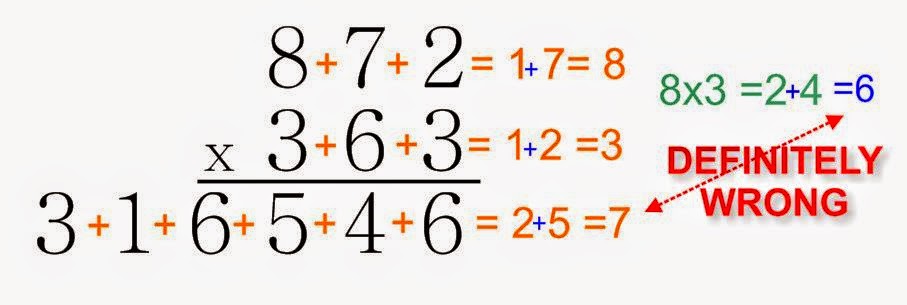

Here's another really freaky cool trick. Let's say you multiplied two very large numbers: 1,246*35,791 = 44,595,586. To check your work, add 1, 2, 4, 6 = 13, then add that 1 and 3 to get 4. Then add the 3, 5, 7, 9, 1; that's 25, and 2+5=7. Then, since you're multiplying, multiply the 7*4=28, then 2+8=10, and 1+0=1. That's a lot of collapsing, but it's worth it. Compare this to the sum of 4, 4, 5, 9, 5, 5, 8, 6 = 46, and 4+6=10, and 1+0=1. If the numbers match, the answer is most probably right! If the numbers don't match, it's certainly wrong, like this: Does 27*43 = 1151? Well, 9*7=63 and 6+3=9 ... and the digits of 1151 add to 8. So it's WRONG, for sure. This trick works for addition and subtraction just the same.
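
The digit-collapsing check is easy to sketch in Python. The names `digital_root` and `product_looks_right` are my own labels for illustration.

```python
def digital_root(n: int) -> int:
    """Keep summing the digits until a single digit is left."""
    while n >= 10:
        n = sum(int(d) for d in str(n))
    return n


def product_looks_right(a: int, b: int, claimed: int) -> bool:
    """False means the claimed product is certainly wrong; True means probably right."""
    expected = digital_root(digital_root(a) * digital_root(b))
    return expected == digital_root(claimed)


print(product_looks_right(1246, 35791, 44595586))
print(product_looks_right(27, 43, 1151))
```

A match doesn't prove the answer, since a wrong answer can collapse to the same digit (swapping two digits, for instance, never changes the sum).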

Squaring two-digit numbers that end in 5 is easy because the product always ends in 25. Take 85 squared. 8*9=72, so it's 7225. X5 squared is [X*(X+1)] with 25 tacked on. Voilà. Works for multi-digit numbers too.
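
A tiny Python sketch, including a three-digit case. The function name is made up for illustration.

```python
def square_ending_in_5(n: int) -> int:
    """Square a positive whole number whose last digit is 5."""
    if n % 10 != 5:
        raise ValueError("number must end in 5")
    x = n // 10                        # everything in front of the 5
    return int(f"{x * (x + 1)}25")     # X*(X+1), then tack on 25


print(square_ending_in_5(85), square_ending_in_5(105))
```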

Vedic Division is really pretty extraordinary. It works best when dividing by a 2-digit number that ends in 9 or another high digit. Say, 47869/49. You change the 49 to 50 and just divide by 5, working left to right. 47/5 is 9, remainder 2. But because we fudged a bit to get 5, instead of dividing 28 (the carried 2 and the next digit, 8) by 5, you first add the 9 from the quotient to 28, so the next step is 37/5 = 7R2, then (26+7=33)/5 = 6R3, and 39+6=45 ... so the answer is 976 R45. If your divisor ends in 8, then double the previous digit in the quotient; if 7, triple it. If it ends in 1, subtract the previous quotient digit; if in 2, subtract it twice. If that's not clear, buy the lectures. They're worth it, I assure you.
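
Here is my attempt at a Python sketch of the ends-in-9 case only. Two things are my own additions rather than anything from the lectures: the name `divide_by_x9`, and the final clean-up step for when the leftover remainder turns out to be as big as the divisor.

```python
def divide_by_x9(n: int, divisor: int) -> tuple[int, int]:
    """Divide n by a 2-digit divisor ending in 9; returns (quotient, remainder)."""
    if divisor % 10 != 9 or not 19 <= divisor <= 99:
        raise ValueError("divisor must be a 2-digit number ending in 9")
    m = (divisor + 1) // 10            # 49 -> 50 -> divide by 5 instead
    *head, last = [int(c) for c in str(n)]
    quotient = r = q_prev = 0
    for digit in head:
        # Carried remainder and next digit, plus the previous quotient digit.
        q_prev, r = divmod(10 * r + digit + q_prev, m)
        quotient = 10 * quotient + q_prev
    remainder = 10 * r + last + q_prev # e.g. 39 + 6 = 45 in the example above
    extra, remainder = divmod(remainder, divisor)
    return quotient + extra, remainder


print(divide_by_x9(47869, 49))
```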

One of the most fun lessons was figuring out the day of the week for any date. You have to memorize a few things: add 1 for the 1900s, 3 for the 1800s, 5 for the 1700s, and 0 for the 1600s or 2000s. And you have to memorize a number from 0 to 6 for each month: January is 6, February 2, March 2, April 5, May 0, June 3, July 5, August 1, September 4, October 6, November 2, December 4. Pure memorization, though there are tricks you can use. Then you do it this way. Let's say Feb 12 1809 -- Charles Darwin's birthday. 3 for the 1800s, plus 2 because there were two leap years by '09 (9/4, throw out the remainder), then add the 9 itself ... 14. For Feb add 2, that's 16. Then 12 for the day, we're at 28. Divide by 7; the remainder is what we're looking for. 28/7=4R0. Sunday is 0, Monday is 1, through Saturday (6). Darwin was born on a Sunday. This gets easier when you start dropping any multiple of 7 at any time, and drop any multiple of 28 years between the years 1901 and 2099. Nov. 6 1975? 75/4 gives 18 leap years, and 75-56 (28*2) is 19. 19+18 is 37; drop the 7s and that leaves 2. Add another 2 for Nov, 6 for the 6th, and 1 for the 1900s. That's 11. Drop a 7 and it's 4, that's Thursday. If you're early in the millennium, it's real easy. What day is July 4 in 2015? 15, plus 3 leap years, plus 5 for July, plus 4 for the day is 27. 27/7=3R6, Saturday.

There are just a couple of twists. In leap years, subtract 1 from the codes for January and February, so they are 5 and 1 respectively. And this calendar correction I was not even aware of: a year ending in 00 does not leap, unless it is divisible by 400 -- then it does. So 1900 was not a leap year, but 2000 was.
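
Putting the codes and the twists together, the day-of-week method might look like this in Python. It's a sketch that only covers the centuries whose codes are listed above (1600 through 2099); the constant and function names are my own.

```python
MONTH_CODES = [6, 2, 2, 5, 0, 3, 5, 1, 4, 6, 2, 4]          # Jan .. Dec
CENTURY_CODES = {16: 0, 17: 5, 18: 3, 19: 1, 20: 0}
DAYS = ["Sunday", "Monday", "Tuesday", "Wednesday",
        "Thursday", "Friday", "Saturday"]


def is_leap(year: int) -> bool:
    """Years ending in 00 only leap when divisible by 400."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def day_of_week(year: int, month: int, day: int) -> str:
    """Day of the week from century code + year + leap years + month code + day."""
    century, yy = divmod(year, 100)
    total = CENTURY_CODES[century] + yy + yy // 4 + MONTH_CODES[month - 1] + day
    if is_leap(year) and month <= 2:
        total -= 1                     # Jan and Feb codes drop by 1 in leap years
    return DAYS[total % 7]


print(day_of_week(1809, 2, 12))   # Darwin's birthday
print(day_of_week(1975, 11, 6))
print(day_of_week(2015, 7, 4))
```

The code skips the drop-the-sevens shortcuts and takes a single remainder at the end, which gives the same answer.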

Many of these tricks, if you actually do them in your head, require holding numbers while you work on others. That gets confusing. The Major System helps, because it converts numbers to consonant sounds that you can build into words. You basically read numerals like the alphabet instead: 1=T/D/Th, 2=N, 3=M, 4=R, 5=L, 6=J/soft G/sh/ch, 7=K/hard G, 8=F/V, 9=P/B, 0=S/Z. Words are much easier to remember than strings of digits, and when you have to keep both in your head, they are less likely to get confused. I've been using the Major System for a while, so I was gratified when Benjamin recommended it.

I've combed through many of my notes for this little summary -- but there's more, and Professor Benjamin will explain it much better. He took me to a few places, near the end, which almost started up that old headache again. But it was worth it. I recommend the video version of the course, not the audio, because there is quite a lot of visualization. If you buy it, wait for a sale, and you'll need a notebook. Benjamin is earnest, enthusiastic, well paced, and clear. He gives excellent examples, explains why these things work, and demonstrates almost inhuman mastery of these skills, sometimes thinking aloud so you can see his process. It's so much fun. Here's a link: http://www.thegreatcourses.com/courses/secrets-of-mental-math.html